Web Scraping Using Beautiful Soup Word Cloud + Python – Tutorial Part 2

Web Scraping Using Beautiful Soup:

In our previous article, we understood what is Web Scraping? why Web Scraping? Different ways for web scraping and step by step tutorial of Web Scraping using Beautiful Soup.

What to do with the data we scraped?

There are several actions that can be performed to explore the data collected in the excel sheet after web scraping of the opencodez website. In order to extend our learning to what all can be done with the structured data extraction, here we will try explaining 2 interesting and powerful topics. First is wordcloud generation and the other concept which we will introduce is Topic Modeling which comes under the umbrella of NLP.

Word Cloud

1) What is Word cloud:

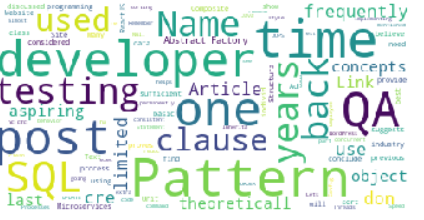

It is a visual representation that highlights the high-frequency words present in a corpus of text data after we have removed the least important regular English words called stopwords including other alphanumeric letters from the text.

2) Use of word cloud:

It is an interesting way to look at the text data and gain useful insight instantly without reading the whole text.

3) Required tools and knowledge:

- Python

- Pandas

- wordcloud

- matplotlib

4) A summary of Code:

In the web scraping code provided in the last article, we do have created a data frame named df using the pandas library and exported this data in a CSV. In this article, we will consider the excel data as input data afresh and start our code in a new manner from here. We will only focus on the column named Article_Para which consists of the most text data. Next, we will convert the desired Article_Para column into a string form and apply the wordcloud function on the text with various parameter inputs. Finally, we will plot the wordcloud using the matplotlib library.

5) Code:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

#import pandas, wordcloud, matplotlib libraries import pandas as pd from wordcloud import WordCloud, STOPWORDS import matplotlib.pyplot as mplot #Read the csv file and change the Article_Para into string type df = pd.read_csv('/content/OpenCodez_Articles.csv', index_col=0) para_docs=(df.Article_Para.astype('str')) #implement the wordcloud function on the text data oc_cloud= WordCloud(background_color='white',stopwords= STOPWORDS, max_words=100, max_font_size=50,random_state=1).generate(str(para_docs)) #Generate the wordcloud output mplot.imshow(oc_cloud) mplot.axis('off') mplot.show |

6) Explanation of some terms used in the code:

Stopwords are general words which are used in sentence creation. These words do not generally add any value to the sentence and do not help us gain any insight. For example A, The, This, That, Who etc. We will remove these words from our analysis using the STOPWORDS as a parameter in the Wordcloud function. mplot.axis(‘off’) is mentioned to disable the axis display in the word cloud output

7) Word cloud output:

8) Reading the output:

The prominent words are QA, SQL, Testing, Developer, Microservices, etc which provides us with information about the most frequently used words in the Article_Para of the data frame. This gives instant insight into the articles which we can expect to be available including various other concepts.

Topic Modeling

1) What is Topic Modeling:

This is a topic that falls under the NLP concept. Here what we do is try to identify various flavors of the topic that exists in our corpus of text or documents.

2) Use of Topic Modeling:

Its use is in identifying what all topic flavors are available in a particular text/document. For instance, on the IMDB web page of Troy we have got say around 5000+ reviews. If we collect all the available reviews and perform a topic modeling on those review text input, we will get several flavors identified which can instantly help us understand what all colors the movie Troy can provide. Like heroics of

Achilles, the tragic end of Hector, etc. In our opencodez.com text data input, we can recognize the various types of topics that the various articles provide to the readers.

3) Required tools and knowledge:

- Python

- Pandas

- gensim

- NLTK

4) A Summary of Code:

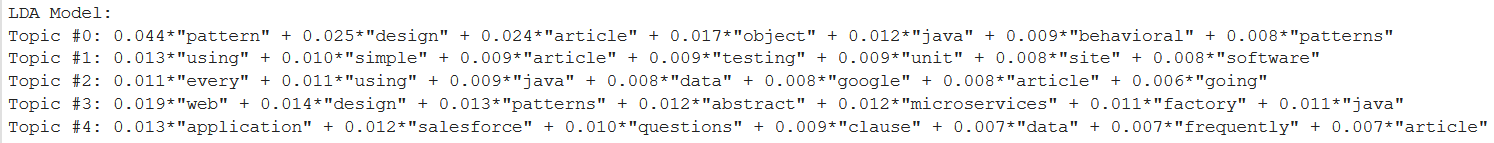

We are going to incorporate the LDA ( Latent Dirichlet Allocation) for Topic Modelling for which we will use the gensim library. NLTK library is the Natural Language Toolkit which will be used to clean and tokenize our text data. By tokenization, we break our string sequence of text data into separate pieces of words, punctuations, symbols, etc. The tokenized data is next converted to a corpus. (The LDA concept is beyond the scope of this article.) We create an LDA model on the corpus using the gensim library to generate topics and print them to see the output.

5) Code:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 |

# Import the pandas library, # Read the csv and convert the desired Article_Para column into string type import pandas as pd df = pd.read_csv('/content/OpenCodez_Articles.csv', index_col = 0) para_docs = df.Article_Para para_docs = (df.Article_Para.astype('str')) # Import re, nltk libraries and the stopwords feature. List of stopwords has been extended by adding some words on our own. import re import nltk nltk.download('punkt') nltk.download('stopwords') from nltk import word_tokenize from nltk.corpus import stopwords STOPWORDS = stopwords.words('english') newStopWords = ['one', 'like', 'used', 'see'] STOPWORDS.extend(newStopWords) # Define the clean text function def clean_text(text): tokenized_text = word_tokenize(text.lower()) cleaned_text = [x for x in tokenized_text if x not in STOPWORDS and re.match('[a-zA-Z\-][a-zA-Z\-]{2,}', x)] return cleaned_text # Create a list of words to be tokenized tokenized_data = [] for text in para_docs: tokenized_data.append(clean_text(text)) # Perform tokenization after converting all the words into lower case from nltk.tokenize import word_tokenize gen_docs = [[w.lower() for w in word_tokenize(text)] for text in para_docs] # We import gensim, # Create a dictionary from the tokenized data. Next create corpus using the 'bag of words' technique(doc2bow) import gensim dictionary = gensim.corpora.Dictionary(tokenized_data) corpus = [dictionary.doc2bow(gen_doc) for gen_doc in tokenized_data] # Perform the LDA model on the corpus of data and create as many topics as we need from gensim import models, corpora lda_model = models.LdaModel(corpus = corpus, num_topics = 5, id2word = dictionary) print("LDA Model:") for i in range(10): print("Topic #%s:" % i, lda_model.print_topic(i, 7)) |

6) Reading the output:

We can change the value in parameters to get any number of topics or the number of words to be displayed in each topic. Here we have wanted 5 topics with 7 words in each topic. We can observe that the topics are related to java, salesforce, unit testing, microservices. If we increase the number of topics to let say 10, we can find out other flavors of existing topics as well.

Conclusion:

In this two-article series we have taken you through Web Scraping, how it can be used for data collection, interpretation and in the end, effective ways to present the data analysis. Access to such data helps make informed decisions.

I hope you find the information useful. Please do not hesitate to write comments/questions. We will try our best to answer.